AI for hearing assistance: deep learning with HeardThat at CES

The HeardThat team recently wrapped up a fun few days at the CES 2021 all-virtual experience. As usual, accessibility technology was a hot topic. Hearing tech in particular continues to advance, which isn’t surprising considering that one in eight Americans aged 12 or older has hearing loss in both ears.

As we spoke with media, potential HeardThat users, and other companies, we heard a lot of interest in how HeardThat’s use of AI relates to what others in the hearing space are talking about. Terms like AI, machine learning, and deep learning are applied to a broad range of applications and features. In this post, we’ll try to provide some clarity.

All of these terms have become popular. Broadly, they refer to techniques that automatically create or improve solutions based on experience rather than being explicitly programmed.

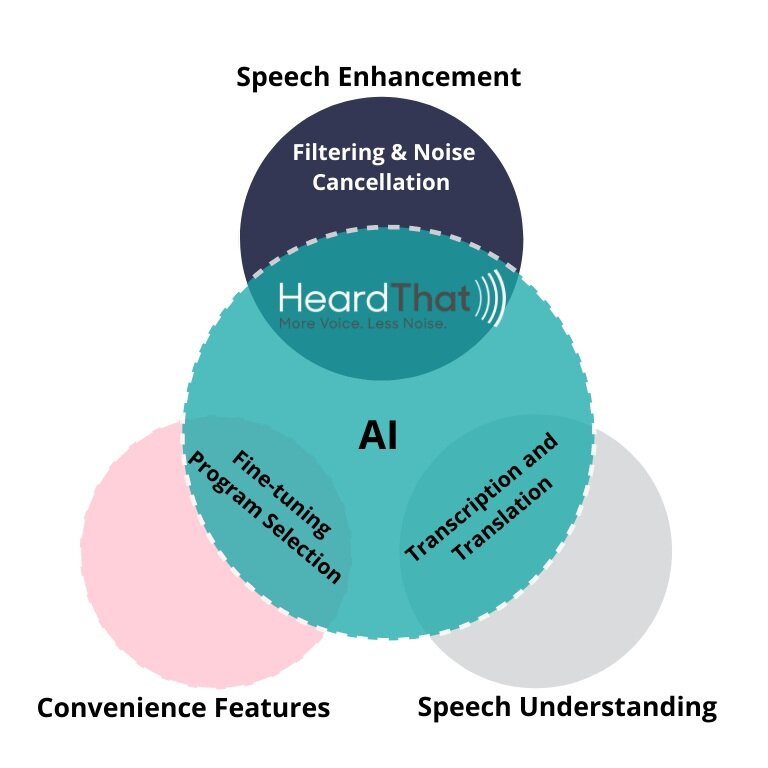

This diagram shows some of the uses of AI and where HeardThat’s work fits in.

How does HeardThat use AI and machine learning?

Machine learning is a subfield of AI. HeardThat uses a specific type of machine learning, called deep learning, to provide a unique speech-enhancement solution based on separating speech from noise.

For context, the modern era of deep learning is generally considered to have started around 2012, when deep learning produced a major breakthrough in image classification. In subsequent years, it was applied to problems such as speech recognition, machine translation, and speech separation.

Only very recently has deep learning been applied to speech enhancement, the problem HeardThat addresses. The team trained deep learning models on thousands of hours of speech, noise, and mixtures of the two, so that the models learned to tell speech and noise apart and to separate one from the other.
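For readers curious what "mixtures of the two" can look like in practice, here is a minimal sketch of one common way to build such training data: scale a noise recording so the mixture hits a chosen signal-to-noise ratio (SNR), then add it to clean speech. The noisy mixture becomes the model's input and the clean speech its target. This is an illustration under stated assumptions, not HeardThat’s actual pipeline, which this post doesn’t describe; the function names (`power`, `mix_at_snr`, `snr_db`) are hypothetical, and a synthetic tone and random noise stand in for real recordings.

```python
import math
import random


def power(signal):
    """Mean power (average squared amplitude) of a list of samples."""
    return sum(x * x for x in signal) / len(signal)


def snr_db(speech, noise):
    """Speech-to-noise ratio in decibels."""
    return 10 * math.log10(power(speech) / power(noise))


def mix_at_snr(speech, noise, target_snr_db):
    """Hypothetical helper: scale `noise` so that the speech-to-noise ratio
    equals `target_snr_db`, then add it to `speech`.

    Returns (mixture, scaled_noise). In a training set, `mixture` would be
    the model input and the clean `speech` the target it learns to recover.
    """
    if len(speech) != len(noise):
        raise ValueError("speech and noise must have the same length")
    # Required noise power is power(speech) / 10^(SNR/10); solve for the gain.
    gain = math.sqrt(power(speech) / (power(noise) * 10 ** (target_snr_db / 10)))
    scaled_noise = [gain * n for n in noise]
    mixture = [s + n for s, n in zip(speech, scaled_noise)]
    return mixture, scaled_noise


# Usage: 1 second at an assumed 16 kHz sample rate, with a 220 Hz tone
# standing in for speech and Gaussian noise standing in for background noise.
rng = random.Random(0)
sample_rate = 16000
speech = [math.sin(2 * math.pi * 220 * t / sample_rate) for t in range(sample_rate)]
noise = [rng.gauss(0.0, 1.0) for _ in range(sample_rate)]

mixture, scaled_noise = mix_at_snr(speech, noise, target_snr_db=5.0)
training_pair = (mixture, speech)  # (model input, clean target)
print(round(snr_db(speech, scaled_noise), 6))  # → 5.0
```

Repeating this over many speech clips, noise types, and SNR levels yields the kind of varied speech/noise/mixture data that lets a model learn the difference between the two.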

You may also hear about AI in other areas of hearing and speech processing, as shown in the diagram. There are many forms of machine learning, and they aren’t all comparable, because each tackles a different problem. Just because AI has been used to solve one problem doesn’t mean that every problem has been solved.

What matters is not so much the technique as whether it enables a more effective solution. In the case of speech and noise, deep learning has recently enabled more progress on this important problem than the world had seen in many years.

If you haven’t tried it yet, HeardThat is available for iOS on the Apple App Store and for Android on the Google Play Store, free for a limited time.

For further questions on the tech behind HeardThat, please visit www.heardthatapp.com/contact or email info@singularhearing.com.